Dynamics-Guided Diffusion Model for Sensor-less Robot Manipulator Design

Task-specific Designs without Task-specific Training

We present the Dynamics-Guided Diffusion Model (DGDM), a data-driven framework for generating task-specific manipulator designs without task-specific training. Given object shapes and task specifications, DGDM generates sensor-less manipulator designs that can blindly manipulate objects towards desired motions and poses using an open-loop parallel motion. This framework 1) flexibly represents manipulation tasks as interaction profiles, 2) represents the design space using a geometric diffusion model, and 3) efficiently searches this design space using the gradients provided by a dynamics network trained without any task information. We evaluate DGDM on various manipulation tasks, ranging from shifting and rotating objects to converging objects to a specific pose. Our generated designs outperform the optimization-based and unguided diffusion baselines by a relative 31.5% and 45.3%, respectively, in average success rate. With the ability to generate a new design within 0.8s, DGDM facilitates rapid design iteration and enhances the adoption of data-driven approaches for robot mechanism design.

Technical Summary Video (with audio)

Qualitative Results

Pose Convergence

The goal of pose convergence is to design fingers that always reorient a target object to a specified orientation (in the manipulator frame) when the gripper closes in parallel. Such a manipulator can be quite useful in industrial settings such as assembly lines: when objects are fed in with different poses, it automatically aligns them to the same pose, and combining it with a global transformation of the gripper moves objects to any particular configuration.

More examples of pose convergence manipulators in the real world:

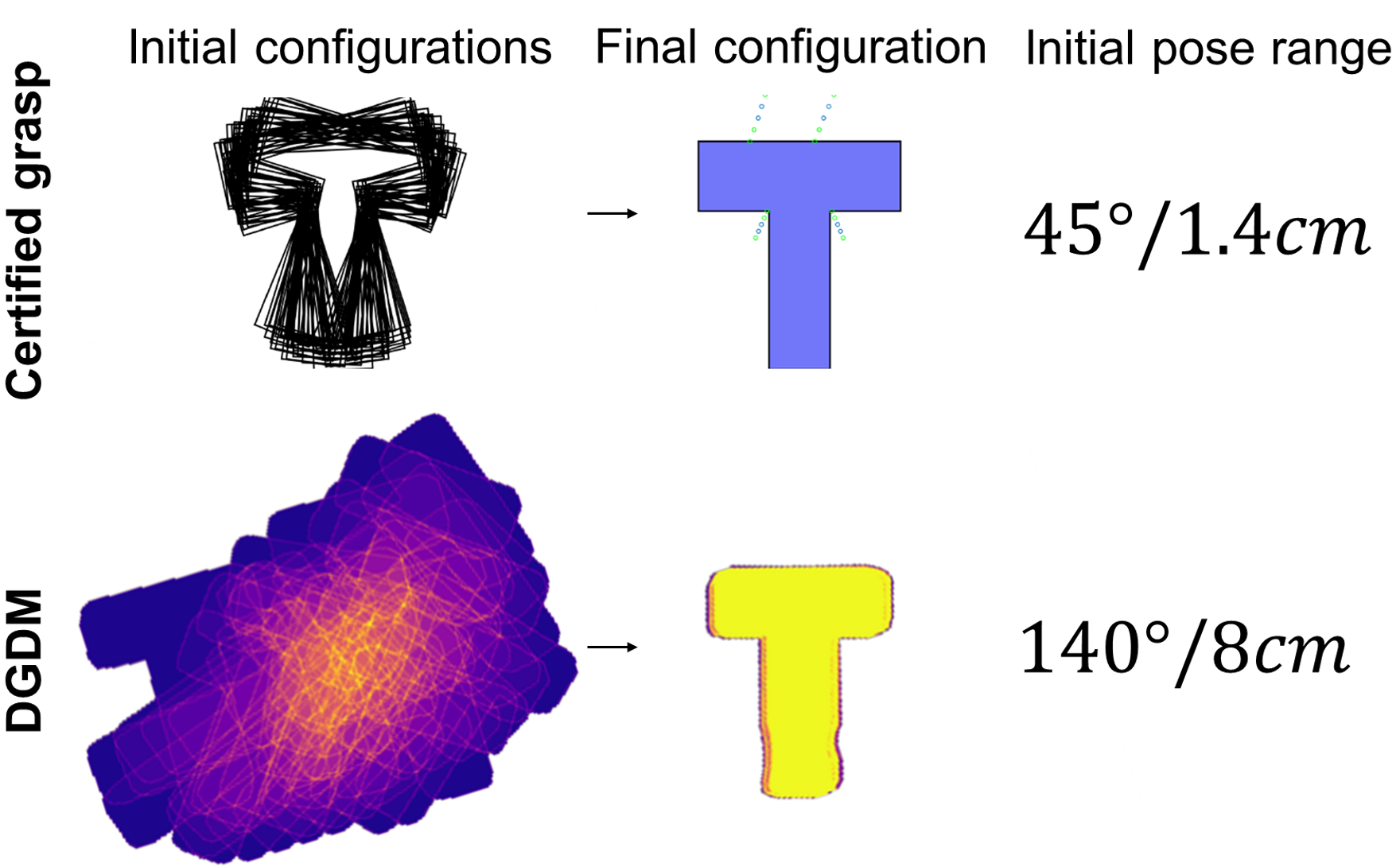

We added a comparison to certified grasping for the convergence task. This method achieves convergence through trajectory optimization instead of manipulator design optimization. However, even with a more complex system (two 7 DoF arms and two parallel jaw grippers), it achieves a much smaller convergence range (45 degrees, 1.4 cm) than ours (140 degrees, 8 cm) on the T object. It also works only for this one convergence task on polygonal object shapes, while our method can be used for different objectives and a wider range of objects.

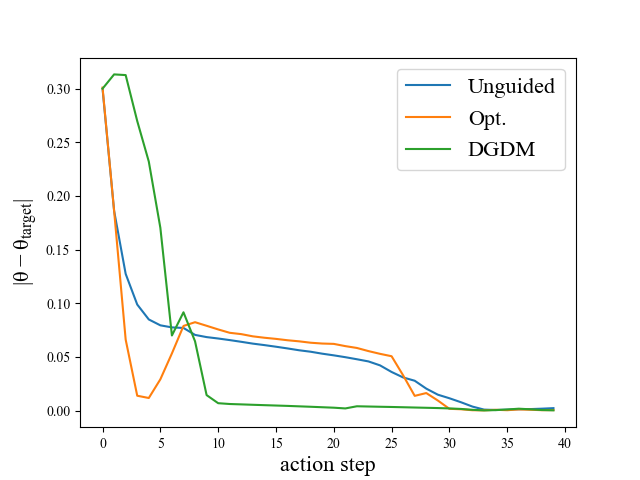

We plot the progress of convergence over 40 open-close actions with manipulators generated by the baselines and DGDM. The Unguided baseline converges the slowest, taking more than 30 steps. The Opt. baseline exhibits an unstable dip at the start and only achieves consistent alignment with the target orientation after 30 steps. Manipulators generated by DGDM not only have larger convergence ranges but also converge faster (within 10 steps) than the baselines.

Shift Up/Down

Shift Left/Right

Multi-object Results

Our framework also allows designing manipulators for a set of objects to achieve a task.

Rotate Clockwise

Details on Interaction Profile

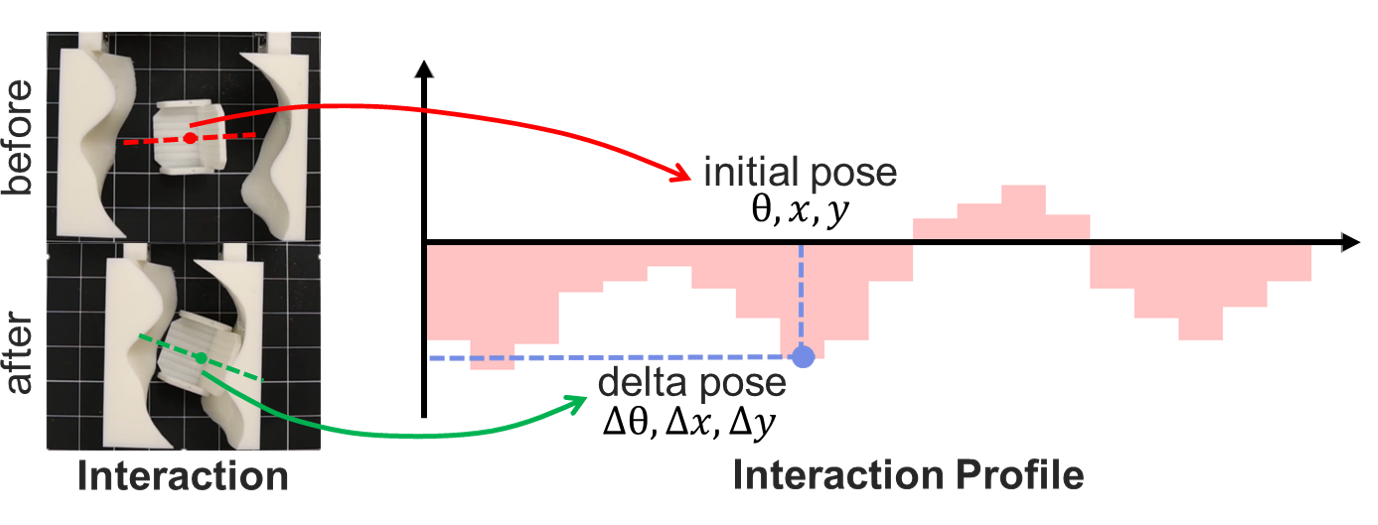

Interaction Profile

The interaction profile between a manipulator and an object is defined as the distribution of the delta pose change of the object caused by the manipulator-object interaction over all possible initial poses of the object. Here, the manipulator-object interaction is a simple parallel closing action, and the object pose is represented as a 2D translation and rotation. For example, in the figure below, when the object's initial pose is \( (\theta,x,y) \), its delta pose after the interaction is \( (\Delta\theta,\Delta x,\Delta y) \), which provides one data point on the interaction profile. By uniformly sampling all initial poses, we obtain the complete interaction profile.

In our implementation, we use simulation to obtain the ground-truth interaction profile and train a dynamics network to infer the predicted interaction profile for any manipulator-object pair without simulation.

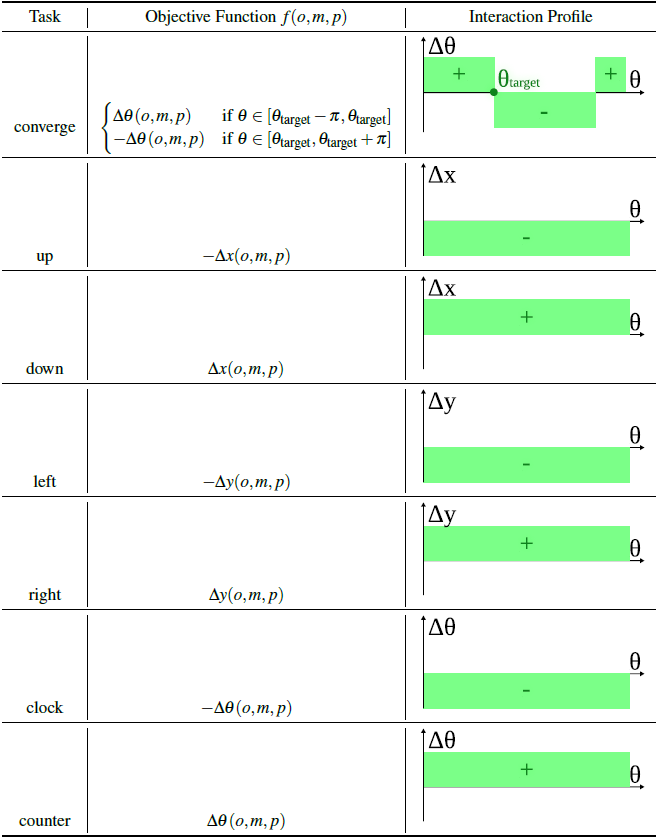

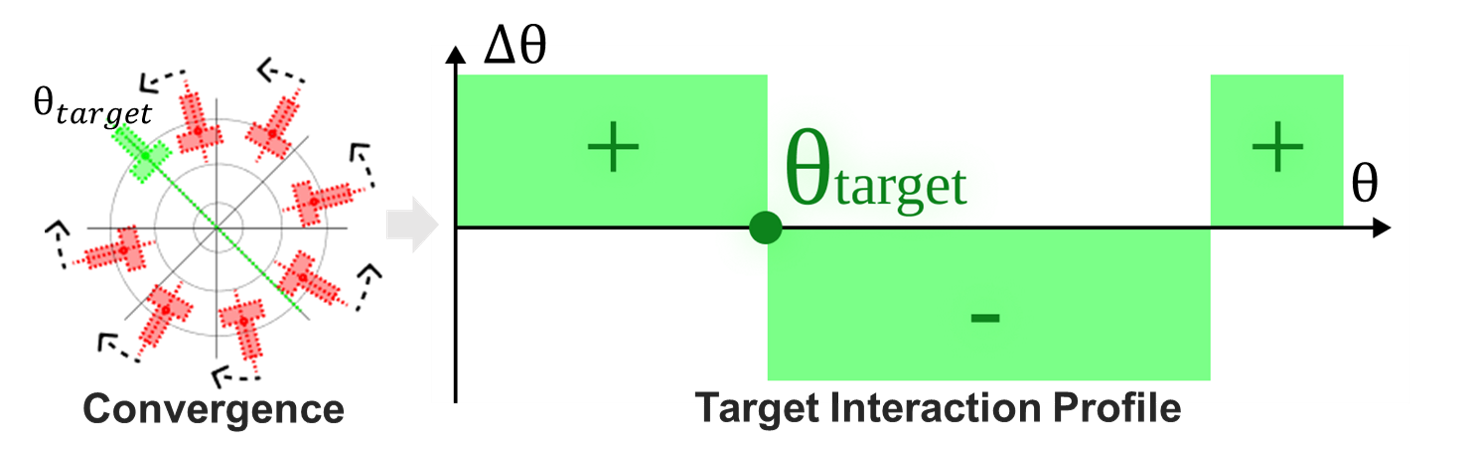
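
The sampling procedure described above can be sketched in a few lines of Python. This is a minimal illustration, not the project's implementation: `toy_closing_action` is a hypothetical stand-in for the simulator, and the grid resolution and pose ranges are assumptions chosen only to keep the example small.

```python
import math
from itertools import product


def toy_closing_action(theta, x, y):
    """Hypothetical stand-in for one simulated parallel closing action.

    Returns the delta pose (dtheta, dx, dy). The toy rule rotates the
    object toward theta = 0 and pulls it toward x = 0; a real setup
    would query a physics simulator here.
    """
    return (-0.5 * math.sin(theta), -0.5 * x, 0.0)


def linspace(lo, hi, n):
    """Return n evenly spaced values from lo to hi (inclusive)."""
    step = (hi - lo) / (n - 1)
    return [lo + i * step for i in range(n)]


def interaction_profile(step_fn, thetas, xs, ys):
    """Map every sampled initial pose to the delta pose it produces."""
    return {pose: step_fn(*pose) for pose in product(thetas, xs, ys)}


# Assumed sampling grid: 9 orientations, 3 x-offsets, 3 y-offsets.
thetas = linspace(-math.pi, math.pi, 9)
xs = linspace(-1.0, 1.0, 3)
ys = linspace(-1.0, 1.0, 3)
profile = interaction_profile(toy_closing_action, thetas, xs, ys)
```

Each key of `profile` is an initial pose \( (\theta,x,y) \) and each value is the corresponding \( (\Delta\theta,\Delta x,\Delta y) \), so one dictionary entry corresponds to one data point on the interaction profile.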

Target Interaction Profile

A target interaction profile is defined for a specific task objective. For example, the target interaction profile for the convergence task is shown in the figure below. This interaction profile indicates that the object should rotate in the positive direction when its initial orientation is smaller than the convergence orientation, and in the negative direction otherwise.
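
As a rough illustration of the rule just stated, the convergence target can be written as a sign function of the initial orientation. The function name, the default goal of zero, and the choice to return 0 exactly at the goal are assumptions made for this sketch.

```python
def convergence_target(theta, theta_goal=0.0):
    """Desired rotation direction for the convergence task.

    Returns +1 (rotate in the positive direction) when the initial
    orientation is below the goal, -1 when it is above, and 0 at the
    goal itself (the last case is an assumption of this sketch).
    """
    if theta < theta_goal:
        return 1
    if theta > theta_goal:
        return -1
    return 0


# Evaluate the target profile on a few sample orientations (radians).
sample_thetas = [-1.0, -0.25, 0.0, 0.25, 1.0]
target_profile = [convergence_target(t) for t in sample_thetas]
```

Other task objectives would replace this rule with a different mapping from initial pose to desired delta pose.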

Target vs. Predicted Interaction Profile

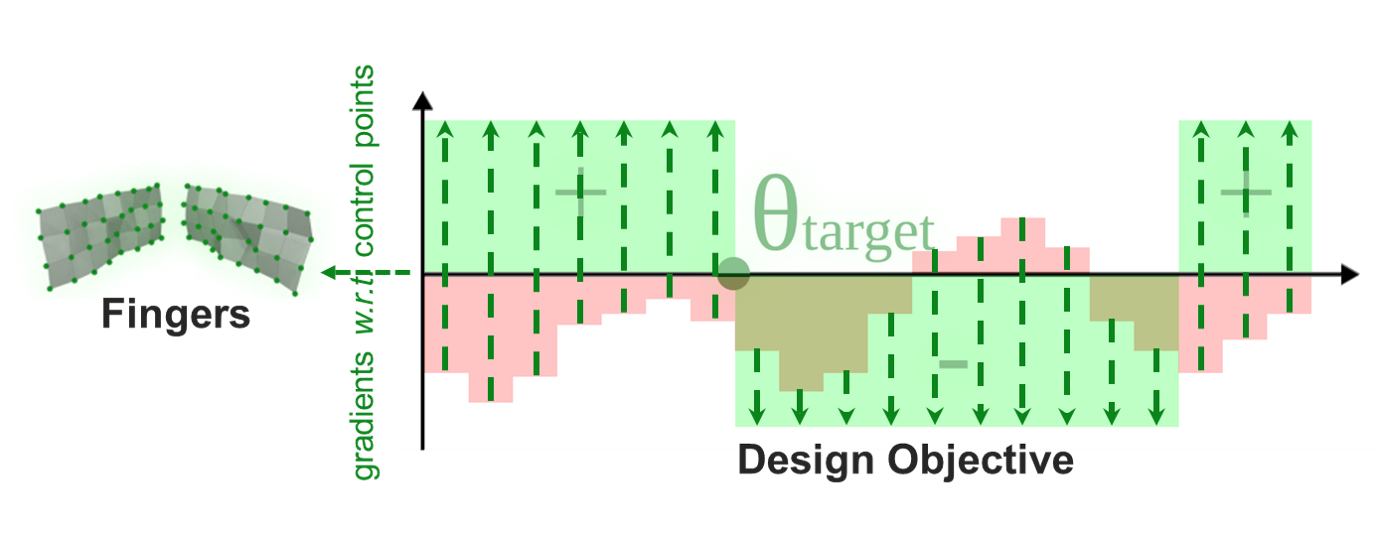

The difference between the target interaction profile and the predicted interaction profile (inferred by the dynamics network) provides the gradients for the manipulator design, as illustrated in the figure. Since the dynamics network is fully differentiable, we can compute the gradient of this difference with respect to the input manipulator geometry.
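
To make the idea concrete, here is a toy sketch of how a mismatch between target and predicted profiles yields a gradient on design parameters. Everything in it is an assumption for illustration: `toy_dynamics` is a hypothetical linear stand-in for the dynamics network, the design is a list of three weights rather than a manipulator geometry, the mismatch is a mean squared error, and plain gradient descent is swapped in for the diffusion-guidance step.

```python
import math

# Assumed grid of initial orientations over [-pi, pi).
THETAS = [-math.pi + 2 * math.pi * i / 32 for i in range(32)]


def toy_dynamics(design, theta):
    """Hypothetical differentiable stand-in for the dynamics network.

    Predicts the rotation at orientation theta as a weighted sum of
    sine features; the weights play the role of design parameters.
    """
    return sum(w * math.sin((k + 1) * theta) for k, w in enumerate(design))


def target_rotation(theta, theta_goal=0.0):
    """Convergence target: rotate positively below the goal, else negatively."""
    return 1.0 if theta < theta_goal else -1.0


def loss_and_grad(design):
    """Mean squared profile mismatch and its gradient w.r.t. the design."""
    n = len(THETAS)
    loss = 0.0
    grad = [0.0] * len(design)
    for theta in THETAS:
        residual = toy_dynamics(design, theta) - target_rotation(theta)
        loss += residual ** 2 / n
        for k in range(len(design)):
            # d(residual^2)/d(w_k) = 2 * residual * sin((k + 1) * theta)
            grad[k] += 2.0 * residual * math.sin((k + 1) * theta) / n
    return loss, grad


design = [0.0, 0.0, 0.0]
initial_loss, _ = loss_and_grad(design)
for _ in range(100):
    _, grad = loss_and_grad(design)
    design = [w - 0.1 * g for w, g in zip(design, grad)]
final_loss, _ = loss_and_grad(design)
```

With a learned network, the analytic gradient in `loss_and_grad` would instead come from automatic differentiation, and the resulting gradient would steer the diffusion sampling rather than drive a direct descent loop.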

List of Target Interaction Profile Plots

We provide a comprehensive list of task objectives and their corresponding interaction plots. For visualization purposes, we simplify the horizontal axes of interaction profile plots to include only initial orientations \(\theta\).